CKA Study Outline: Basic Deployment and Pod Management

Deployment, Configuration and Verification

Environment

Deployment environment

| Host | Role | IP |
|---|---|---|
| k8smaster | Master | 192.168.10.120 |
| k8snode1 | Node | 192.168.10.121 |
| k8snode2 | Node | 192.168.10.122 |

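Version information

| Program | Version |
|---|---|
| Linux | Rocky Linux 9.3 |
| Kubernetes | 1.28.2 (installed as 1.27.6, then upgraded at the end of this article) |
| Docker | 24.0.7 |
| cri-dockerd | 0.3.8 |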

Installation

```shell
# On all nodes: set up passwordless SSH between the hosts
# Download the required YAML files and images in advance, or route traffic through a proxy in global mode

# Disable the firewall
systemctl disable --now firewalld
# Disable SELinux (permissive immediately, disabled after the next reboot)
setenforce 0
sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config
# Disable swap
swapoff -a ; sed -i '/swap/d' /etc/fstab
# Kernel modules to load at boot
cat > /etc/modules-load.d/containerd.conf <<EOF
overlay
br_netfilter
EOF
# Load the modules now
modprobe overlay
modprobe br_netfilter
cat > /etc/sysctl.conf <<EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
sysctl -p
# Add the Docker CE repository
dnf config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
sed -i 's+download.docker.com+mirrors.aliyun.com/docker-ce+' /etc/yum.repos.d/docker-ce.repo
# Refresh metadata and install Docker CE
dnf makecache
dnf install -y docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin git
# Install the versionlock plugin and pin the Docker version
dnf install -y python3-dnf-plugin-versionlock
dnf versionlock add docker-ce
# Registry mirror and the systemd cgroup driver
mkdir -p /etc/docker
tee /etc/docker/daemon.json <<-'EOF'
{
  "group": "docker",
  "registry-mirrors": ["https://37y8py0j.mirror.aliyuncs.com"],
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
# Reload systemd and start Docker at boot
systemctl daemon-reload
systemctl enable --now docker
docker pull registry.aliyuncs.com/google_containers/pause:3.9
docker pull registry.aliyuncs.com/google_containers/coredns:v1.10.1
docker tag registry.aliyuncs.com/google_containers/coredns:v1.10.1 registry.aliyuncs.com/google_containers/coredns/coredns:v1.10.1
```
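Before moving on it can be worth confirming that the kernel parameters are really in place, since kubeadm's preflight checks complain when bridge netfilter or IP forwarding is off. The sketch below is a hypothetical helper (`check_sysctl_file` is not part of any tool); it only reads a sysctl-style file such as the /etc/sysctl.conf written above, so the live values should still be checked with `sysctl net.ipv4.ip_forward` and friends.

```shell
#!/bin/sh
# Hypothetical helper: check that a sysctl-style file sets the kernel
# parameters Kubernetes networking relies on to 1.
REQUIRED_SYSCTL_KEYS="net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables net.ipv4.ip_forward"

# check_sysctl_file FILE: exit 0 only if every required key is set to 1 in FILE
check_sysctl_file() {
  local file=$1 rc=0 key value
  for key in $REQUIRED_SYSCTL_KEYS; do
    # Strip blanks around "key = value"; the last assignment in the file wins.
    # Commented-out lines ("#key = 1") never match because of the leading '#'.
    value=$(awk -F'=' -v k="$key" '
      { gsub(/[ \t]/, "", $1); gsub(/[ \t]/, "", $2) }
      $1 == k { v = $2 }
      END { print v }' "$file")
    if [ "$value" != "1" ]; then
      echo "not set to 1: $key" >&2
      rc=1
    fi
  done
  return "$rc"
}
```

Usage: `check_sysctl_file /etc/sysctl.conf && echo "sysctl settings OK"`.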

Install cri-dockerd

```shell
# Download the release binary (the codeload URL returns the source tarball, not this archive)
wget https://github.com/Mirantis/cri-dockerd/releases/download/v0.3.8/cri-dockerd-0.3.8.amd64.tgz
tar xf cri-dockerd-0.3.8.amd64.tgz
cp cri-dockerd/cri-dockerd /usr/bin/
chmod +x /usr/bin/cri-dockerd
# Create the service unit ('EOF' is quoted so that $MAINPID is written literally)
cat > /usr/lib/systemd/system/cri-docker.service <<'EOF'
[Unit]
Description=CRI Interface for Docker Application Container Engine
Documentation=https://docs.mirantis.com
After=network-online.target firewalld.service docker.service
Wants=network-online.target
Requires=cri-docker.socket

[Service]
Type=notify
ExecStart=/usr/bin/cri-dockerd --container-runtime-endpoint fd:// --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.9
ExecReload=/bin/kill -s HUP $MAINPID
TimeoutSec=0
RestartSec=2
Restart=always
StartLimitBurst=3
StartLimitInterval=60s
LimitNOFILE=infinity
LimitNPROC=infinity
LimitCORE=infinity
TasksMax=infinity
Delegate=yes
KillMode=process

[Install]
WantedBy=multi-user.target
EOF
# Create the socket unit
cat > /usr/lib/systemd/system/cri-docker.socket <<EOF
[Unit]
Description=CRI Docker Socket for the API
PartOf=cri-docker.service

[Socket]
ListenStream=%t/cri-dockerd.sock
SocketMode=0660
SocketUser=root
SocketGroup=docker

[Install]
WantedBy=sockets.target
EOF
# Start the service
systemctl daemon-reload
systemctl enable cri-docker --now
systemctl is-active cri-docker
```
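The cri-docker.service unit contains `$MAINPID`, a variable that systemd fills in when it runs ExecReload, so it has to reach the unit file literally. With an unquoted `<<EOF` delimiter the current shell expands it first (normally to an empty string); a quoted `<<'EOF'` writes the text verbatim. A small, self-contained demonstration:

```shell
#!/bin/sh
# Demonstrates why a heredoc that contains $MAINPID needs a quoted delimiter.
unset MAINPID   # in a normal login shell this variable is not set

# Unquoted delimiter: the shell expands $MAINPID before the text is written
unquoted=$(cat <<EOF
ExecReload=/bin/kill -s HUP $MAINPID
EOF
)

# Quoted delimiter: the text is kept verbatim, so systemd would see $MAINPID
quoted=$(cat <<'EOF'
ExecReload=/bin/kill -s HUP $MAINPID
EOF
)

printf 'unquoted: %s\nquoted:   %s\n' "$unquoted" "$quoted"
```

After writing the real unit, `grep MAINPID /usr/lib/systemd/system/cri-docker.service` should show the variable name rather than a blank.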

Install kubeadm, kubelet and kubectl

```shell
# On all hosts
cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
cat > /etc/sysconfig/kubelet <<EOF
KUBELET_EXTRA_ARGS="--cgroup-driver=systemd"
EOF
dnf makecache
dnf install -y kubelet-1.27.6-0 kubeadm-1.27.6-0 kubectl-1.27.6-0
dnf versionlock add kubeadm kubectl kubelet
systemctl enable --now kubelet
```

Deploy the master node

```shell
# Pull the control-plane images
kubeadm config images pull --cri-socket unix:///var/run/cri-dockerd.sock --kubernetes-version v1.27.6 --image-repository=registry.aliyuncs.com/google_containers
# Initialize the master node
kubeadm init --image-repository=registry.aliyuncs.com/google_containers \
  --kubernetes-version v1.27.6 \
  --pod-network-cidr=10.244.0.0/16 \
  --service-cidr=10.96.0.0/12 \
  --cri-socket=unix:///var/run/cri-dockerd.sock \
  --ignore-preflight-errors=Swap \
  --v=5
# Give kubectl the admin kubeconfig (kubeadm init prints these commands on success)
mkdir -p $HOME/.kube
cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
chown $(id -u):$(id -g) $HOME/.kube/config
# kubectl command completion (bash-completion-extras is an EL7 package and is not needed on Rocky Linux 9)
dnf install -y bash-completion
source /usr/share/bash-completion/bash_completion
# Enable it for all users
kubectl completion bash >/etc/bash_completion.d/kubectl
echo 'source <(kubectl completion bash)' >/etc/profile.d/k8s.sh && source /etc/profile
# Deploy Calico
wget https://docs.projectcalico.org/v3.25/manifests/calico.yaml --no-check-certificate
# In calico.yaml, uncomment CALICO_IPV4POOL_CIDR and set it to the --pod-network-cidr value:
#   - name: CALICO_IPV4POOL_CIDR
#     value: "10.244.0.0/16"
kubectl apply -f calico.yaml
```
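The address ranges in this setup must agree: Calico's CALICO_IPV4POOL_CIDR has to equal `--pod-network-cidr`, and the Pod CIDR (10.244.0.0/16), the Service CIDR (10.96.0.0/12) and the node network (192.168.10.0/24 here) must not overlap. A minimal overlap check in plain shell arithmetic, assuming IPv4 only; the function names are illustrative, not part of kubeadm:

```shell
#!/bin/sh
# ip_to_int A.B.C.D -> the address as a 32-bit integer
ip_to_int() {
  local IFS=.
  set -- $1   # split the dotted quad into $1..$4
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# cidr_range A.B.C.D/N -> "FIRST LAST" (integers) of the network
cidr_range() {
  local ip=${1%/*} bits=${1#*/} size start
  size=$(( 1 << (32 - bits) ))
  start=$(( $(ip_to_int "$ip") & ~(size - 1) & 0xFFFFFFFF ))   # clear host bits
  echo "$start $(( start + size - 1 ))"
}

# cidrs_overlap CIDR1 CIDR2: exit 0 if the two networks share any address
cidrs_overlap() {
  local r1 r2
  r1=$(cidr_range "$1"); r2=$(cidr_range "$2")
  [ "${r1% *}" -le "${r2#* }" ] && [ "${r2% *}" -le "${r1#* }" ]
}
```

For this cluster, `cidrs_overlap 10.244.0.0/16 10.96.0.0/12 && echo overlap` prints nothing, which is what we want.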

Join the worker nodes

```shell
# Run on each worker node
systemctl enable --now docker.service cri-docker.socket
kubeadm join 192.168.10.120:6443 --token pm6d0f.u0mtmea3xxgzg7i6 \
  --discovery-token-ca-cert-hash sha256:1f11ed30db531bfc0ab181a15d9a8875d48440e9041a48f98d78f2e6d165b995 \
  --cri-socket=unix:///var/run/cri-dockerd.sock \
  --v=5
```
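The token and CA hash in the join command are specific to this cluster, and bootstrap tokens expire after 24 hours by default; a fresh join command can be printed on the master with `kubeadm token create --print-join-command`. When pasting such a command by hand, a quick format check catches truncated values. The patterns follow the documented formats (`[a-z0-9]{6}.[a-z0-9]{16}` for the token, `sha256:` plus 64 hex digits for the hash); the helper names are hypothetical:

```shell
#!/bin/sh
# is_bootstrap_token TOKEN: exit 0 if TOKEN looks like "abcdef.0123456789abcdef"
is_bootstrap_token() {
  printf '%s\n' "$1" | LC_ALL=C grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

# is_ca_cert_hash HASH: exit 0 if HASH looks like "sha256:<64 lowercase hex digits>"
is_ca_cert_hash() {
  printf '%s\n' "$1" | LC_ALL=C grep -Eq '^sha256:[0-9a-f]{64}$'
}
```

Both values from the join command above pass these checks.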

Configuration

Tooling

```shell
# Install Helm
curl -fsSL -o get_helm.sh https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3
chmod 700 get_helm.sh
sh get_helm.sh
helm version
# Add a chart repository
helm repo add stable https://charts.helm.sh/stable
helm repo update
# Helm command completion
helm completion bash > ~/.helmrc
echo "source ~/.helmrc" >> ~/.bashrc
# Install kubens for switching namespaces
curl -L https://github.com/ahmetb/kubectx/releases/download/v0.9.1/kubens -o /usr/local/bin/kubens
chmod +x /usr/local/bin/kubens
# Reload the shell environment
source ~/.bashrc
```

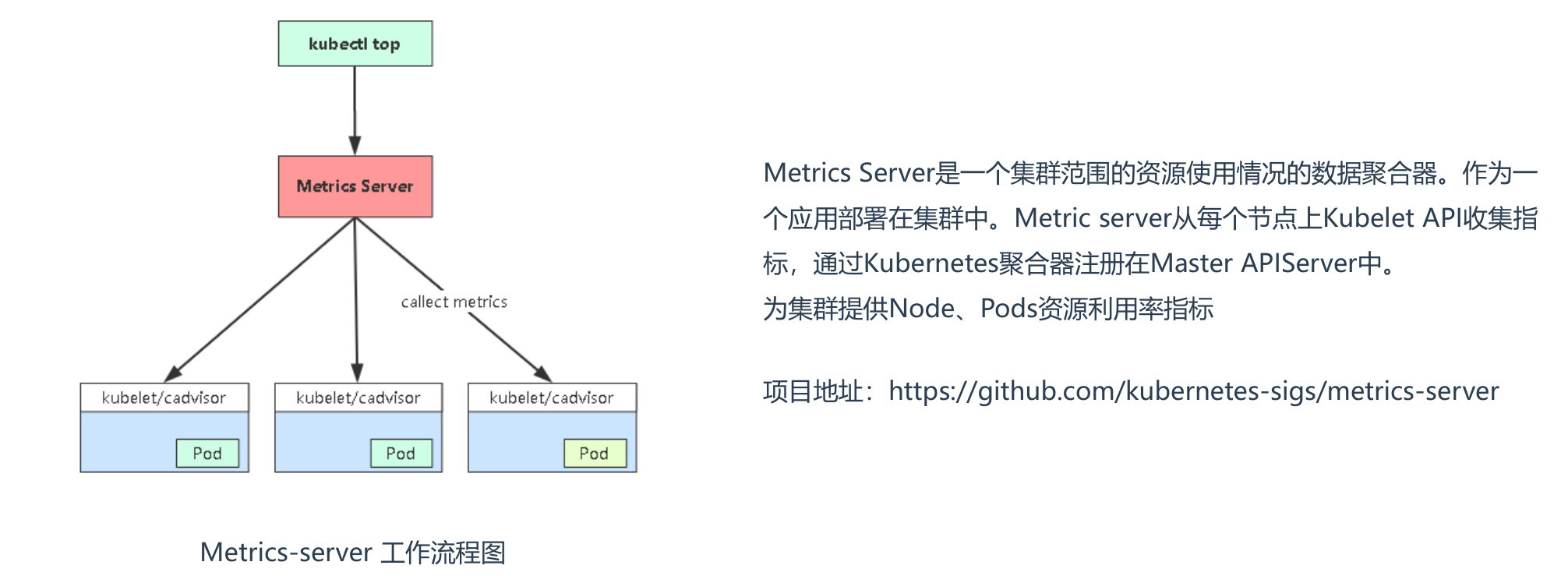

Resource metrics

```shell
# How metrics-server works:
# kubectl top -> apiserver -> metrics-server (Pod) -> kubelet (cAdvisor)
# Pull the metrics-server image on every node
docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/metrics-server:v0.6.4
# Download the manifest
wget https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.6.4/components.yaml
# Edit the manifest
vim components.yaml
……
      containers:
      - args:
        - --cert-dir=/tmp
        - --secure-port=443
        - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
        - --kubelet-use-node-status-port
        - --metric-resolution=15s
        - --kubelet-insecure-tls   # do not verify the kubelets' self-signed serving certificates (the connection is still HTTPS); without it the probe fails with HTTP 500
        image: registry.cn-hangzhou.aliyuncs.com/google_containers/metrics-server:v0.6.4   # switched to a mirror reachable from China
……
# Apply the manifest and check the installation
kubectl apply -f components.yaml
kubectl get apiservices | grep metrics
kubectl get --raw /apis/metrics.k8s.io/v1beta1/nodes
# View resource usage
[root@k8smaster ~]# kubectl top nodes
NAME        CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
k8smaster   179m         8%     2341Mi          66%
k8snode1    73m          3%     1278Mi          36%
k8snode2    80m          4%     1272Mi          36%
[root@k8smaster ~]# kubectl top pods
NAME                      CPU(cores)   MEMORY(bytes)
whoami-688496c9b7-5r45m   0m           2Mi
whoami-688496c9b7-bt8mj   0m           2Mi
whoami-688496c9b7-ffwps   0m           2Mi
```
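The `kubectl top` output is plain columns, so it is easy to post-process. A sketch that flags nodes above a memory threshold, assuming the default column order shown above (NAME, CPU(cores), CPU%, MEMORY(bytes), MEMORY%); `nodes_over_memory` is a made-up name:

```shell
#!/bin/sh
# Hypothetical helper: read `kubectl top nodes` output on stdin and print
# "NAME MEMORY%" for every node whose MEMORY% is above THRESHOLD ($1).
nodes_over_memory() {
  awk -v t="$1" 'NR > 1 {            # skip the header line
    pct = $5; sub(/%$/, "", pct)     # "66%" -> "66"
    if (pct + 0 > t + 0) print $1, $5
  }'
}
```

With the output above, `kubectl top nodes | nodes_over_memory 60` would list only k8smaster (66%).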

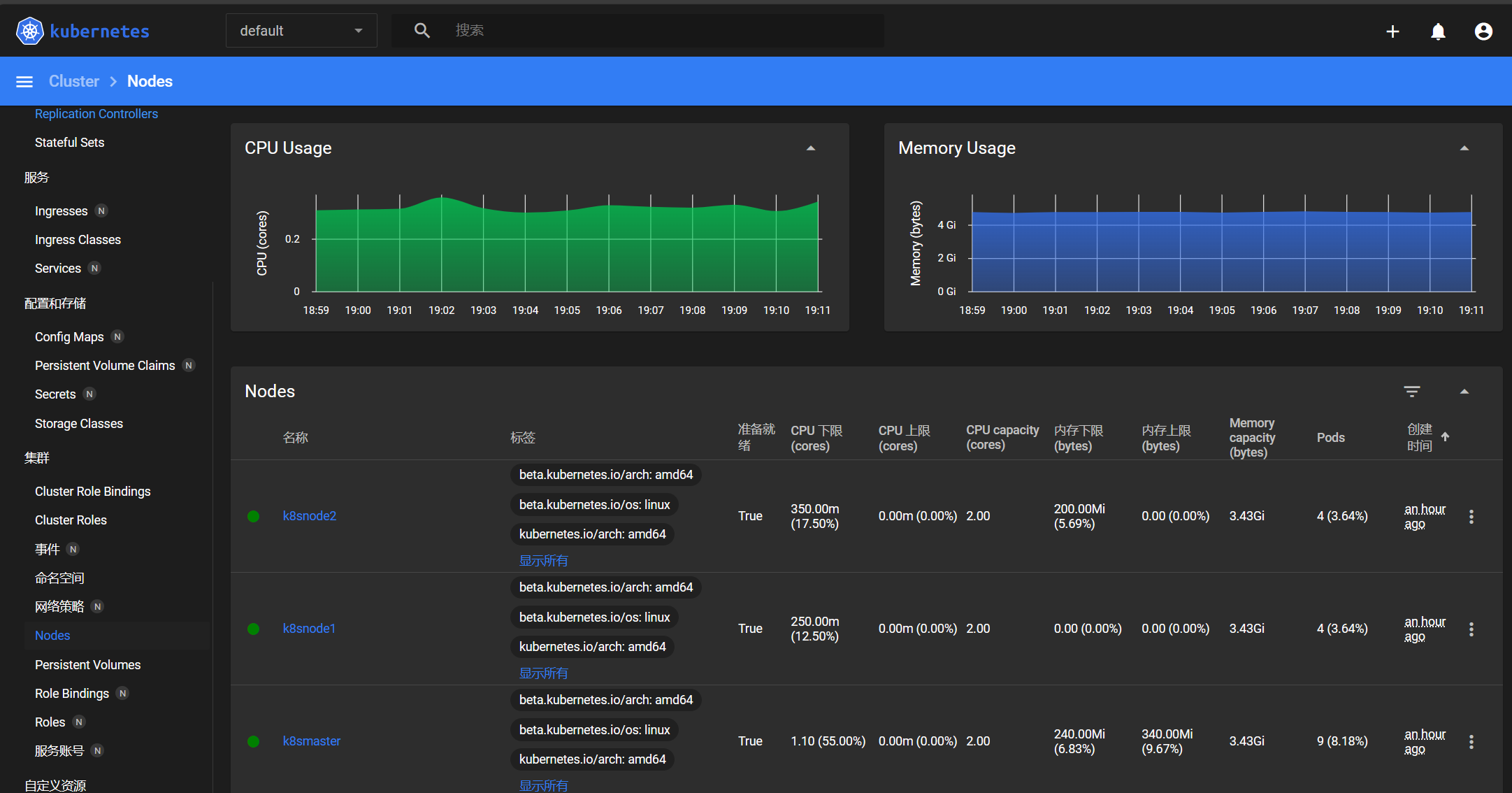

Dashboard

Deploying the dashboard

```shell
# Download the manifest
wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.7.0/aio/deploy/recommended.yaml
cp recommended.yaml k8sdashbord.yaml
# Pull the images
docker pull docker.io/kubernetesui/dashboard:v2.7.0
docker pull docker.io/kubernetesui/metrics-scraper:v1.0.8
sed -i 's|kubernetesui/dashboard:v2.7.0|docker.io/kubernetesui/dashboard:v2.7.0|' k8sdashbord.yaml
sed -i 's|kubernetesui/metrics-scraper:v1.0.8|docker.io/kubernetesui/metrics-scraper:v1.0.8|' k8sdashbord.yaml
# Expose the Service on a node port
……
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  type: NodePort          # added
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 30003     # added: map to node port 30003
  selector:
    k8s-app: kubernetes-dashboard
……
# Deploy
kubectl apply -f k8sdashbord.yaml
[root@k8smaster ~]# kubectl get pod -n kubernetes-dashboard
NAME                                         READY   STATUS    RESTARTS   AGE
dashboard-metrics-scraper-54d78c4b4b-8vjrd   1/1     Running   0          9m6s
kubernetes-dashboard-55664676d-x6c8s         1/1     Running   0          9m6s
```

Creating a user

```shell
# Create an admin user named root
[root@k8smaster ~]# cat creat-admin-user.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: root
  namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: root
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: root
  namespace: kubernetes-dashboard
[root@k8smaster ~]# kubectl apply -f creat-admin-user.yaml
serviceaccount/root created
clusterrolebinding.rbac.authorization.k8s.io/root created
```

```shell
# Create a long-lived token
[root@k8smaster ~]# cat root-user-secret.yaml
apiVersion: v1
kind: Secret
metadata:
  name: root-user-secret
  namespace: kubernetes-dashboard
  annotations:
    kubernetes.io/service-account.name: root
type: kubernetes.io/service-account-token
[root@k8smaster ~]# kubectl apply -f root-user-secret.yaml
secret/root-user-secret created
# The dashboard's secrets
[root@k8smaster ~]# kubectl get secret -n kubernetes-dashboard
NAME                              TYPE                                  DATA   AGE
kubernetes-dashboard-certs        Opaque                                0      17m
kubernetes-dashboard-csrf         Opaque                                1      17m
kubernetes-dashboard-key-holder   Opaque                                2      17m
root-user-secret                  kubernetes.io/service-account-token   3      46s
# Read the token
[root@k8smaster ~]# kubectl get secret root-user-secret -n kubernetes-dashboard -o jsonpath='{.data.token}' | base64 -d
eyJhbGciOiJSUzI1NiIsImtpZCI6InVZZE4tbldGT1QzZFAtNHN5T0tCMXJOZUxWbVE5Ql8zMlRfUGQ5OTNXeE0ifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJyb290LXVzZXItc2VjcmV0Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6InJvb3QiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiIwNGYzNmEwYi04M2ZjLTQ1YjItOGUxNS0yM2Y5MTJjYTcwNmEiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZXJuZXRlcy1kYXNoYm9hcmQ6cm9vdCJ9.XAnJBaIwRcbC0JhjvNx7o8fmBtcL67JiwLPOh-oSPjVIOKvEW7Ltc-PQJB0BkJo5xQtNknDFSKXOXr3HJOFUy4dovVRAFhpozD8Fg-IlBE3faMAOpFv-sG44mEi-4k4r37W95Eh9InQ-yHogw_39dsA6qk58FzClpUtCgbZBNM8o3VOYJ0sam2WAZmuIJN8a1iGr1mci59a2YScgV-LPHkardUpuQvFzRwN0XGxNKYLgPclmjvr-nigNLcJSCvhBwqJU-35NeZDEK8XCNG_I8hYzPvr1_XJCsHScAd-GyOHby7vguhTli8c8IpqlF0A76F8Dd7FPPldZworDrbUIBA
```
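The token printed above is a JWT: three base64url segments separated by dots, the middle one holding JSON claims such as the namespace and ServiceAccount name. Decoding that segment is a quick way to confirm which account a token belongs to. A sketch, assuming GNU or BusyBox `base64 -d`; `jwt_payload` is a hypothetical helper and it does not verify the signature:

```shell
#!/bin/sh
# jwt_payload TOKEN: print the decoded claims (middle segment) of a JWT.
# Inspection only: the signature is NOT checked.
jwt_payload() {
  local seg
  seg=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')   # base64url -> base64
  case $(( ${#seg} % 4 )) in                             # restore stripped '=' padding
    2) seg="${seg}==" ;;
    3) seg="${seg}=" ;;
  esac
  printf '%s' "$seg" | base64 -d
}
```

Usage: `jwt_payload "$(kubectl get secret root-user-secret -n kubernetes-dashboard -o jsonpath='{.data.token}' | base64 -d)"`. On Kubernetes 1.24 and later, a short-lived token can also be requested without a Secret via `kubectl -n kubernetes-dashboard create token root`.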

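Monitoring and log management

Checking cluster and resource status

```shell
# Control-plane component status (ComponentStatus is deprecated since v1.19 but still answers)
kubectl get cs
# Node status
kubectl get node
# Resource details
kubectl describe <resource-type> <resource-name>
# Resource information (add -o wide or -o yaml for more)
kubectl get <resource-type> <resource-name>
# Node resource usage
kubectl top node <node name>
# Pod resource usage
kubectl top pod <pod name>
```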

Viewing logs

```shell
# Components managed by systemd:
journalctl -u kubelet
# Components deployed as Pods:
kubectl logs kube-proxy-btz4p -n kube-system
# System log:
/var/log/messages
# A container's standard output:
kubectl logs <pod-name>
kubectl logs -f <pod-name>
[root@k8smaster ~]# kubectl logs -n default whoami-688496c9b7-5r45m
2023/12/21 07:39:24 Starting up on port 80
# Log path: kubectl logs -> apiserver -> kubelet -> docker (Docker captures the standard output and writes it to a file)
# Location of that file on the host:
/var/lib/docker/containers/<container-id>/<container-id>-json.log
# Log files the application writes inside the container: open a shell and look in its log directory
kubectl exec -it <pod-name> -- bash
```
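Each line of the `<container-id>-json.log` file mentioned above is one JSON object written by Docker's json-file logging driver, with `log`, `stream` and `time` fields. A rough sketch that turns such lines back into readable `TIME STREAM MESSAGE` text; it assumes that key order, expects each message to end in `\n`, and does not unescape JSON, so for anything serious `jq -r .log` is more robust. The function name is hypothetical:

```shell
#!/bin/sh
# docker_json_log [FILE...]: print "TIME STREAM MESSAGE" for each json-file log line.
# Lines that do not match the assumed layout are skipped silently.
docker_json_log() {
  sed -n 's/^{"log":"\(.*\)\\n","stream":"\([a-z]*\)","time":"\([^"]*\)"}$/\3 \2 \1/p' "$@"
}
```

Usage on a node: `docker_json_log /var/lib/docker/containers/<container-id>/<container-id>-json.log | tail`.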

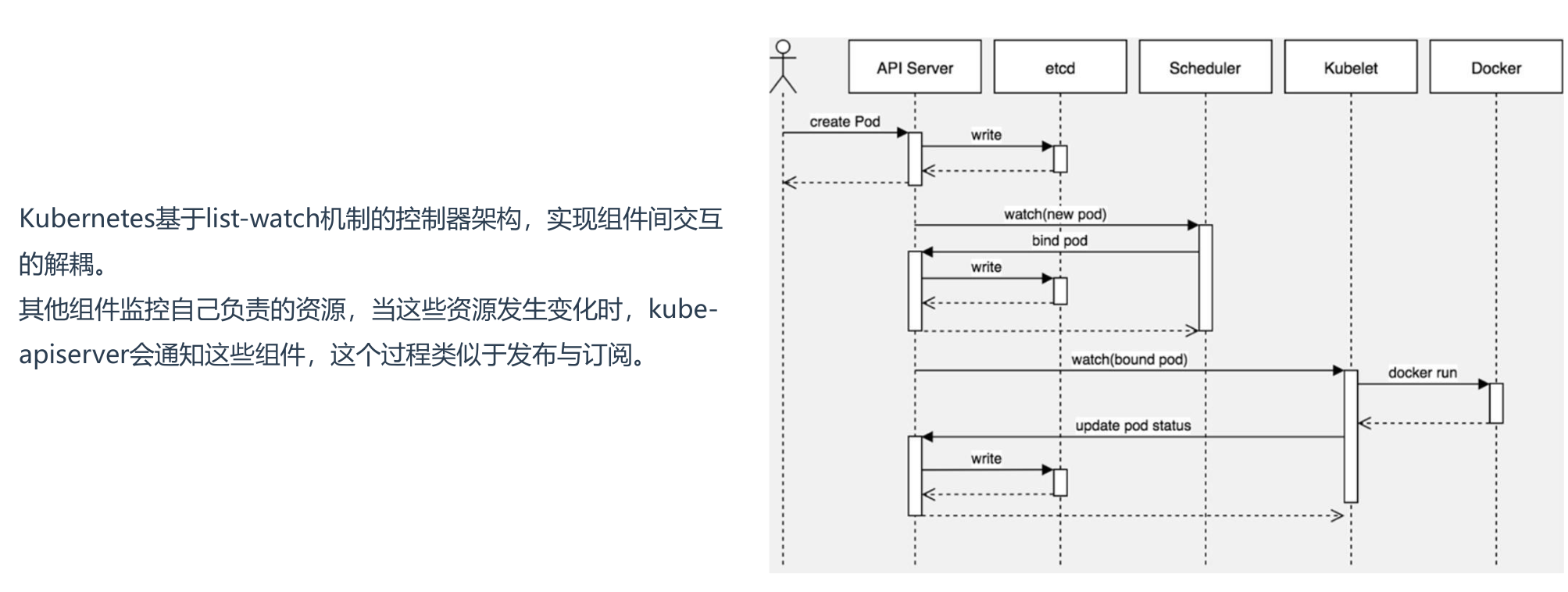

Application lifecycle management

Deploying an application

```shell
# Deploy an image with a Deployment controller
[root@k8smaster ~]# kubectl create deployment whoami --image=traefik/whoami --replicas=3
deployment.apps/whoami created
# List the Deployment and its Pods
[root@k8smaster ~]# kubectl get deployments.apps,pods
NAME                     READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/whoami   3/3     3            3           42s

NAME                          READY   STATUS    RESTARTS   AGE
pod/whoami-6f57d5d6b5-8tx59   1/1     Running   0          42s
pod/whoami-6f57d5d6b5-bt6dj   1/1     Running   0          42s
pod/whoami-6f57d5d6b5-h8j5w   1/1     Running   0          42s
# Publish the Pods through a Service
[root@k8smaster ~]# kubectl expose deployment whoami --port=80 --type=NodePort --target-port=80 --name=web
service/web exposed
[root@k8smaster ~]# kubectl get svc
NAME         TYPE        CLUSTER-IP    EXTERNAL-IP   PORT(S)        AGE
kubernetes   ClusterIP   10.96.0.1     <none>        443/TCP        25h
web          NodePort    10.111.8.67   <none>        80:30045/TCP   7s
# Test the Service via any node IP and the NodePort
# (use the NodePort that kubectl get svc reports; the captured requests below were made against port 31000)
[root@k8smaster ~]# curl 192.168.10.121:31000
Hostname: whoami-6f57d5d6b5-h8j5w
IP: 127.0.0.1
IP: 10.244.249.9
RemoteAddr: 192.168.10.121:35831
GET / HTTP/1.1
Host: 192.168.10.121:31000
User-Agent: curl/7.76.1
Accept: */*

[root@k8smaster ~]# curl 192.168.10.122:31000
Hostname: whoami-6f57d5d6b5-h8j5w
IP: 127.0.0.1
IP: 10.244.249.9
RemoteAddr: 10.244.185.192:35882
GET / HTTP/1.1
Host: 192.168.10.122:31000
User-Agent: curl/7.76.1
Accept: */*

# Delete the resources
[root@k8smaster ~]# kubectl delete deploy whoami
[root@k8smaster ~]# kubectl delete svc web
```
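`kubectl get svc` shows the NodePort inside the PORT(S) column: `80:30045/TCP` means Service port 80 exposed on node port 30045. A sketch that extracts it and checks it against the default NodePort range of 30000-32767; the helper names are illustrative:

```shell
#!/bin/sh
# nodeport_of "80:30045/TCP" -> 30045 (only the first port of a list is handled)
nodeport_of() {
  local ports=${1#*:}   # drop the Service port and the colon: "30045/TCP"
  echo "${ports%%/*}"   # drop the protocol: "30045"
}

# in_default_nodeport_range PORT: exit 0 if PORT lies in 30000-32767,
# the default --service-node-port-range of kube-apiserver
in_default_nodeport_range() {
  [ "$1" -ge 30000 ] && [ "$1" -le 32767 ]
}
```

In scripts, `kubectl get svc web -o jsonpath='{.spec.ports[0].nodePort}'` returns the number directly and avoids text parsing altogether.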

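Service orchestration

YAML syntax

• Indentation expresses hierarchy

• Tabs are not allowed for indentation; use spaces

• Each nesting level is usually indented by 2 spaces

• Put 1 space after a colon, comma, and similar characters

• `---` starts a new YAML document, so one file can hold several resources

• `#` starts a comment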
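Since tab indentation is one of the most common reasons a hand-edited manifest fails to parse, a tiny tab check can save a round trip to the API server. A sketch using only grep (`yaml_tab_lines` is a hypothetical name); it prints `LINE:content` for each offending line and succeeds only if it found any:

```shell
#!/bin/sh
# yaml_tab_lines FILE...: list lines containing a tab character (exit 0 if any were found)
yaml_tab_lines() {
  grep -n "$(printf '\t')" "$@"
}
```

Usage: `yaml_tab_lines dep-whoami.yaml svc-web.yaml || echo "no tabs found"`.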

Creating resources from YAML

```shell
# YAML manifests
[root@k8smaster ~]# cat dep-whoami.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  creationTimestamp: null
  labels:
    app: whoami
  name: whoami
spec:
  replicas: 3
  selector:
    matchLabels:
      app: whoami
  strategy: {}
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: whoami
    spec:
      containers:
      - image: traefik/whoami
        imagePullPolicy: IfNotPresent
        name: whoami
        resources: {}
status: {}
[root@k8smaster ~]# cat svc-web.yaml
apiVersion: v1
kind: Service
metadata:
  creationTimestamp: null
  labels:
    app: whoami
  name: web
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: whoami
  type: NodePort
status:
  loadBalancer: {}
# Create the resources from the YAML files
[root@k8smaster ~]# kubectl apply -f dep-whoami.yaml
deployment.apps/whoami created
[root@k8smaster ~]# kubectl apply -f svc-web.yaml
service/web created
[root@k8smaster ~]# kubectl get deployments.apps,pod
NAME                     READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/whoami   3/3     3            3           41s

NAME                          READY   STATUS    RESTARTS   AGE
pod/whoami-688496c9b7-2b7lh   1/1     Running   0          41s
pod/whoami-688496c9b7-h6j6w   1/1     Running   0          41s
pod/whoami-688496c9b7-jjv7d   1/1     Running   0          41s
[root@k8smaster ~]# kubectl get svc
NAME         TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)        AGE
kubernetes   ClusterIP   10.96.0.1       <none>        443/TCP        26h
web          NodePort    10.98.151.125   <none>        80:30871/TCP   29s
[root@k8smaster ~]# curl 192.168.10.122:30871
Hostname: whoami-688496c9b7-h6j6w
IP: 127.0.0.1
IP: 10.244.185.200
RemoteAddr: 192.168.10.122:7256
GET / HTTP/1.1
Host: 192.168.10.122:30871
User-Agent: curl/7.76.1
Accept: */*

# Delete the resources
[root@k8smaster ~]# kubectl delete -f svc-web.yaml
service "web" deleted
[root@k8smaster ~]# kubectl delete -f dep-whoami.yaml
deployment.apps "whoami" deleted
```

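Deployment workloads

Deployment is the most commonly used Kubernetes workload controller. It is a higher-level abstraction for deploying and managing Pods; other workload controllers include DaemonSet and StatefulSet.

Main features of a Deployment:

Manages Pods and ReplicaSets

Provides rollout, replica management, rolling updates and rollbacks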
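How many Pods a rolling update replaces at a time is governed by `maxSurge` and `maxUnavailable` in the Deployment's RollingUpdate strategy, both 25% by default; Kubernetes rounds maxSurge up and maxUnavailable down. A worked sketch of that arithmetic (`rolling_bounds` is illustrative, not a kubectl feature): for the 3-replica Deployments in this article it gives at most 4 Pods and at least 3 available during an update, so Pods are replaced one at a time.

```shell
#!/bin/sh
# rolling_bounds REPLICAS [SURGE_PCT] [UNAVAILABLE_PCT]
# Prints the Pod-count bounds of a RollingUpdate with percentage settings.
rolling_bounds() {
  local replicas=$1 surge_pct=${2:-25} unavail_pct=${3:-25} surge unavail
  surge=$(( (replicas * surge_pct + 99) / 100 ))   # maxSurge rounds up
  unavail=$(( replicas * unavail_pct / 100 ))      # maxUnavailable rounds down
  echo "max_pods=$(( replicas + surge )) min_available=$(( replicas - unavail ))"
}
```

For example, `rolling_bounds 10` prints `max_pods=13 min_available=8`.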

Lifecycle management

```shell
# Application lifecycle: deploy -> upgrade -> roll back -> retire
# Deploy the nginx 1.17 image
[root@k8smaster ~]# kubectl create deployment nginx --image=nginx:1.17 --replicas=3
deployment.apps/nginx created
# Upgrading (each of these changes the image and triggers a rolling update):
# 1. edit the manifest and re-apply it
kubectl apply -f xxx.yaml
# 2. set the image directly
[root@k8smaster ~]# kubectl set image deployment/nginx nginx=nginx:1.24
# 3. open the Deployment in the system editor
kubectl edit deployment/nginx
# A rolling update uses one Deployment and two ReplicaSets (old and new) and replaces a few Pods at a time;
# older ReplicaSets stay behind, scaled to 0, as rollback targets
[root@k8smaster ~]# kubectl get rs
NAME               DESIRED   CURRENT   READY   AGE
nginx-69d846b5c6   0         0         0       23h
nginx-7b8df77865   3         3         3       23h
nginx-7df54cf9fd   0         0         0       23h
# Rolling back: first view the rollout history
[root@k8smaster ~]# kubectl rollout history deployment nginx
deployment.apps/nginx
REVISION  CHANGE-CAUSE
1         <none>
2         <none>
3         <none>
# Roll back to revision 1
[root@k8smaster ~]# kubectl rollout undo deployment/nginx --to-revision=1
deployment.apps/nginx rolled back
# Watch the rollback in progress
[root@k8smaster ~]# kubectl get pods
NAME                     READY   STATUS              RESTARTS   AGE
nginx-7b8df77865-hqwd4   0/1     Terminating         0          23h
nginx-7b8df77865-jqskz   1/1     Running             0          3h
nginx-7b8df77865-klxkh   1/1     Running             0          23h
nginx-7df54cf9fd-qjz52   0/1     ContainerCreating   0          21s
nginx-7df54cf9fd-vcl5k   1/1     Running             0          21s
# Map each ReplicaSet's revision to its image
# (the revision you roll back to is re-numbered as the newest revision)
[root@k8smaster ~]# kubectl describe $(kubectl get rs -o name) | egrep "revision:|Image"
deployment.kubernetes.io/revision: 2
Image: nginx:1.24
deployment.kubernetes.io/revision: 3
Image: nginx:1.25
deployment.kubernetes.io/revision: 4
Image: nginx:1.17
deployment.kubernetes.io/revision: 5
Image: nginx:1.27
```
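To pick a `--to-revision` value, it helps to see which image each revision ran. A sketch that post-processes the `describe | egrep` output above into `REVISION IMAGE` pairs and looks up the newest revision for a given image; it assumes one container per Pod template, and the helper names are hypothetical. `kubectl rollout history deployment/nginx --revision=N` shows the same information for a single revision.

```shell
#!/bin/sh
# rs_revisions: read "revision:" / "Image:" lines on stdin, print "REVISION IMAGE" sorted by revision
rs_revisions() {
  awk '/revision:/ { rev = $NF } /Image:/ { print rev, $NF }' | sort -n
}

# revision_for IMAGE: print the newest revision that ran IMAGE (same stdin); exit 1 if none
revision_for() {
  rs_revisions | awk -v img="$1" '$2 == img { r = $1 } END { if (r != "") print r; else exit 1 }'
}
```

Usage: `kubectl describe $(kubectl get rs -o name) | egrep "revision:|Image" | revision_for nginx:1.24`.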

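Pod basics

Why Pods exist

Main ways to use a Pod:

Running a single container: the most common case; here a Pod can be seen as a thin wrapper around one container.

Running multiple containers: the sidecar pattern. A dedicated container in the Pod does auxiliary work the main application container needs, which decouples that function from the main container so each can be released independently and reused.

How resources are shared

Shared network: the application containers join the network namespace of the infrastructure (pause) container that owns the Pod's network.

Shared storage: containers share data through volumes.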

```shell
# Shared network
apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    run: pod-net-test
  name: pod-net-test
spec:
  containers:
  - image: busybox
    imagePullPolicy: IfNotPresent
    name: test
    command: ["/bin/sh", "-c", "sleep 12h"]
  - image: nginx
    imagePullPolicy: IfNotPresent
    name: web
  dnsPolicy: ClusterFirst
  restartPolicy: Always
# Check the network: port 80 (opened by nginx) is also visible from the busybox container
[root@k8smaster ~]# kubectl exec -it pod-net-test -c test -- /bin/sh
/ # netstat -tlnp
Active Internet connections (only servers)
Proto Recv-Q Send-Q Local Address           Foreign Address         State       PID/Program name
tcp        0      0 0.0.0.0:80              0.0.0.0:*               LISTEN      -
tcp        0      0 :::80                   :::*                    LISTEN      -
# Shared storage (same Pod name as above: delete the first Pod before applying this one,
# since most fields of an existing Pod cannot be changed in place)
apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    run: pod-net-test
  name: pod-net-test
spec:
  containers:
  - image: busybox
    imagePullPolicy: IfNotPresent
    name: test
    command: ["/bin/sh", "-c", "sleep 12h"]
    volumeMounts:
    - name: log
      mountPath: /data
  - image: nginx
    imagePullPolicy: IfNotPresent
    name: web
    volumeMounts:
    - name: log
      mountPath: /usr/share/nginx/html
  volumes:
  - name: log
    emptyDir: {}
  dnsPolicy: ClusterFirst
  restartPolicy: Always
# Verify: a file created in the busybox container appears in the nginx container
[root@k8smaster ~]# kubectl apply -f pod-net-test.yaml
pod/pod-net-test created
[root@k8smaster ~]# kubectl exec -it pod-net-test -c test -- touch /data/NewType
[root@k8smaster ~]# kubectl exec -it pod-net-test -c web -- ls /usr/share/nginx/html/
NewType
```

Container management commands

```shell
# Create a Pod:
kubectl apply -f pod.yaml
# or from the command line:
kubectl run nginx --image=nginx
# List Pods:
kubectl get pods
kubectl describe pod <pod-name>
# View logs:
kubectl logs <pod-name> [-c CONTAINER]
kubectl logs <pod-name> [-c CONTAINER] -f
# Open a shell in a container:
kubectl exec -it <pod-name> [-c CONTAINER] -- bash
# Delete a Pod:
kubectl delete pod <pod-name>
```

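Pod health checks

Restart policy (restartPolicy):

Always: restart the container whenever it exits (the default)

OnFailure: restart the container only if it exits with a non-zero status

Never: never restart the container

There are three types of health check:

livenessProbe (liveness check): if the check fails, the container is killed and then handled according to the Pod's restartPolicy.

readinessProbe (readiness check): if the check fails, Kubernetes removes the Pod from the Service endpoints, so it receives no traffic.

startupProbe (startup check): the liveness and readiness checks only take over after this check succeeds, which protects slow-starting containers from being killed prematurely.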

Three probe mechanisms:

httpGet: send an HTTP request; a status code of at least 200 and below 400 counts as success.

exec: run a shell command; exit status 0 counts as success.

tcpSocket: success if a TCP connection can be established.

```shell
# Deployment with probes (dep-nginx.yaml)
apiVersion: apps/v1
kind: Deployment
metadata:
  creationTimestamp: null
  labels:
    app: nginx
  name: nginx
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  strategy: {}
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: nginx
    spec:
      containers:
      - image: nginx:1.17
        imagePullPolicy: IfNotPresent
        name: nginx
        livenessProbe:        # liveness check
          httpGet:
            path: /
            port: 80
          initialDelaySeconds: 5
          periodSeconds: 10
        readinessProbe:       # readiness check
          httpGet:
            path: /
            port: 80
          initialDelaySeconds: 5
          periodSeconds: 10
# Create the Deployment
kubectl apply -f dep-nginx.yaml
# Create the Service
kubectl expose deployment nginx --name=web --port=80 --target-port=80 --type=NodePort
# Inject a fault: delete the page the probes request
kubectl exec -it nginx-6dc9f95546-76jtp -- rm /usr/share/nginx/html/index.html
kubectl exec -it nginx-6dc9f95546-ffncf -- rm /usr/share/nginx/html/index.html
kubectl exec -it nginx-6dc9f95546-lwz59 -- rm /usr/share/nginx/html/index.html
# The Pods have been restarted
[root@k8smaster ~]# kubectl get pods
NAME                     READY   STATUS    RESTARTS        AGE
nginx-6dc9f95546-76jtp   1/1     Running   2 (3m13s ago)   11m
nginx-6dc9f95546-ffncf   1/1     Running   2 (3m2s ago)    11m
nginx-6dc9f95546-lwz59   1/1     Running   2 (3m3s ago)    11m
```
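Why does deleting index.html trigger restarts? Once the index file is gone, nginx answers `GET /` with 403 Forbidden (directory listing is off by default); 403 is outside the httpGet success range, so after `failureThreshold` consecutive failures (3 by default) the kubelet restarts the container, and the fresh container filesystem brings index.html back. The functions below only mirror the httpGet and exec success rules for illustration; they are not how the kubelet is implemented, and the names are hypothetical:

```shell
#!/bin/sh
# http_probe_ok STATUS: success if 200 <= STATUS < 400 (httpGet rule)
http_probe_ok() {
  [ "$1" -ge 200 ] && [ "$1" -lt 400 ]
}

# exec_probe_ok CMD [ARGS...]: success if the command exits with status 0 (exec rule)
exec_probe_ok() {
  "$@" >/dev/null 2>&1
}
```

To reproduce the httpGet check by hand from a node: `http_probe_ok "$(curl -s -o /dev/null -w '%{http_code}' http://<pod-ip>/)" && echo healthy`.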

Scheduling

Resource limits

Upper limits on what a container may use:

resources.limits.cpu

resources.limits.memory

Minimum resources the container requests; the scheduler uses these values when placing the Pod:

resources.requests.cpu

resources.requests.memory

```yaml
apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    run: my-pod
  name: my-pod
spec:
  containers:
  - image: nginx
    name: my-pod
    imagePullPolicy: IfNotPresent
    resources:
      requests:
        memory: "64Mi"
        cpu: "500m"
      limits:
        memory: "128Mi"
        cpu: "1000m"
```
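The quantities use Kubernetes units: `m` is millicores (1000m = 1 CPU, so the Pod above requests half a core and may use up to one), and `Mi` is mebibytes (2^20 bytes). The API server rejects a container whose request exceeds its limit. A sketch of the conversion and that check, handling only the suffixes used in this article (m, plain cores, Ki/Mi/Gi); the function names are illustrative:

```shell
#!/bin/sh
# cpu_millicores QTY: "500m" -> 500, "1" -> 1000, "0.5" -> 500
cpu_millicores() {
  case $1 in
    *m) echo "${1%m}" ;;
    *)  awk -v c="$1" 'BEGIN { print int(c * 1000 + 0.5) }' ;;   # cores -> millicores
  esac
}

# mem_bytes QTY: "64Mi" -> 67108864 (binary suffixes only; anything else is taken as bytes)
mem_bytes() {
  case $1 in
    *Ki) echo $(( ${1%Ki} * 1024 )) ;;
    *Mi) echo $(( ${1%Mi} * 1024 * 1024 )) ;;
    *Gi) echo $(( ${1%Gi} * 1024 * 1024 * 1024 )) ;;
    *)   echo "$1" ;;
  esac
}

# within_limit REQUEST LIMIT cpu|memory: exit 0 if REQUEST <= LIMIT
within_limit() {
  local req lim
  if [ "$3" = cpu ]; then
    req=$(cpu_millicores "$1"); lim=$(cpu_millicores "$2")
  else
    req=$(mem_bytes "$1"); lim=$(mem_bytes "$2")
  fi
  [ "$req" -le "$lim" ]
}
```

Both pairs in the manifest above pass: `within_limit 500m 1000m cpu && within_limit 64Mi 128Mi memory`.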

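nodeSelector & nodeAffinity

nodeSelector: schedules a Pod onto Nodes whose labels match; if no Node carries a matching label, scheduling fails and the Pod stays Pending.

Constrains a Pod to run on particular nodes

Requires an exact match on the node labels

Typical uses:

Dedicated nodes: group Nodes by business line and manage them accordingly

Special hardware: some Nodes have SSDs or GPUs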

```shell
[root@k8smaster ~]# kubectl label nodes k8snode2 disktype=ssd
node/k8snode2 labeled
[root@k8smaster ~]# kubectl get nodes --show-labels
NAME       STATUS   ROLES    AGE     VERSION   LABELS
k8snode2   Ready    <none>   7d21h   v1.27.6   disktype=ssd
[root@k8smaster ~]# cat my-pod.yaml
apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    run: my-pod
  name: my-pod
spec:
  nodeSelector:
    disktype: "ssd"
  containers:
  - image: nginx
    name: my-pod
    imagePullPolicy: IfNotPresent
# After kubectl apply -f my-pod.yaml, the Pod lands on k8snode2
[root@k8smaster ~]# kubectl get pod -o wide
my-pod   0/1   ContainerCreating   0   7s   k8snode2
```
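nodeAffinity, named in the heading, expresses the same constraint with operators (In, NotIn, Exists, and others) and also supports soft preferences via `preferredDuringSchedulingIgnoredDuringExecution`. A sketch of the nodeSelector Pod above rewritten with a hard nodeAffinity rule; the file and Pod name `my-pod-affinity` are hypothetical. The script only writes the manifest; apply it with `kubectl apply -f my-pod-affinity.yaml`:

```shell
#!/bin/sh
# Write a Pod manifest that requires a node labelled disktype=ssd (same effect as the nodeSelector above)
cat > my-pod-affinity.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: my-pod-affinity
spec:
  affinity:
    nodeAffinity:
      # Hard rule: the Pod stays Pending if no node matches
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        - matchExpressions:
          - key: disktype
            operator: In
            values: ["ssd"]
  containers:
  - name: my-pod
    image: nginx
    imagePullPolicy: IfNotPresent
EOF
echo "wrote my-pod-affinity.yaml"
```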

Upgrading

Checking available versions

```shell
# List the kubelet versions available (kubeadm and kubectl are queried the same way)
dnf --showduplicates list kubelet
Last metadata expiration check: 0:04:18 ago on Mon 22 Jan 2024 06:07:07 PM CST.
Installed Packages
kubelet.x86_64        1.27.6-0        @kubernetes
Available Packages
……
kubelet.x86_64        1.28.0-0        kubernetes
kubelet.x86_64        1.28.1-0        kubernetes
kubelet.x86_64        1.28.2-0        kubernetes
```

Removing the version lock

```shell
[root@master ~]# dnf versionlock delete kubeadm kubectl kubelet
Last metadata expiration check: 0:02:56 ago on Mon 22 Jan 2024 06:43:30 PM CST.
Deleting versionlock for: kubeadm-0:1.27.6-0.*
Deleting versionlock for: kubectl-0:1.27.6-0.*
Deleting versionlock for: kubelet-0:1.27.6-0.*
# Pin the target version so that a newer release is not picked up
[root@master ~]# dnf install -y kubeadm-1.28.2-0 kubectl-1.28.2-0 kubelet-1.28.2-0
```

Checking the upgrade plan

```shell
# Target v1.28.2, the version of the kubeadm just installed
# (the plan suggests v1.28.6, which would first require kubeadm v1.28.6)
[root@masters ~]# kubeadm upgrade plan
[upgrade/config] Making sure the configuration is correct:
[upgrade/config] Reading configuration from the cluster...
[upgrade/config] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'
[preflight] Running pre-flight checks.
[upgrade] Running cluster health checks
[upgrade] Fetching available versions to upgrade to
[upgrade/versions] Cluster version: v1.27.6
[upgrade/versions] kubeadm version: v1.28.2
I0122 18:47:20.390636   22564 version.go:256] remote version is much newer: v1.29.1; falling back to: stable-1.28
[upgrade/versions] Target version: v1.28.6
[upgrade/versions] Latest version in the v1.27 series: v1.27.10

Components that must be upgraded manually after you have upgraded the control plane with 'kubeadm upgrade apply':
COMPONENT   CURRENT       TARGET
kubelet     2 x v1.27.6   v1.27.10

Upgrade to the latest version in the v1.27 series:

COMPONENT                 CURRENT   TARGET
kube-apiserver            v1.27.6   v1.27.10
kube-controller-manager   v1.27.6   v1.27.10
kube-scheduler            v1.27.6   v1.27.10
kube-proxy                v1.27.6   v1.27.10
CoreDNS                   v1.10.1   v1.10.1
etcd                      3.5.7-0   3.5.9-0

You can now apply the upgrade by executing the following command:

        kubeadm upgrade apply v1.27.10

___________________________________________________________________

Components that must be upgraded manually after you have upgraded the control plane with 'kubeadm upgrade apply':
COMPONENT   CURRENT       TARGET
kubelet     2 x v1.27.6   v1.28.6

Upgrade to the latest stable version:

COMPONENT                 CURRENT   TARGET
kube-apiserver            v1.27.6   v1.28.6
kube-controller-manager   v1.27.6   v1.28.6
kube-scheduler            v1.27.6   v1.28.6
kube-proxy                v1.27.6   v1.28.6
CoreDNS                   v1.10.1   v1.10.1
etcd                      3.5.7-0   3.5.9-0

You can now apply the upgrade by executing the following command:

        kubeadm upgrade apply v1.28.6

Note: Before you can perform this upgrade, you have to update kubeadm to v1.28.6.

___________________________________________________________________

The table below shows the current state of component configs as understood by this version of kubeadm.
Configs that have a "yes" mark in the "MANUAL UPGRADE REQUIRED" column require manual config upgrade or
resetting to kubeadm defaults before a successful upgrade can be performed. The version to manually
upgrade to is denoted in the "PREFERRED VERSION" column.

API GROUP                 CURRENT VERSION   PREFERRED VERSION   MANUAL UPGRADE REQUIRED
kubeproxy.config.k8s.io   v1alpha1          v1alpha1            no
kubelet.config.k8s.io     v1beta1           v1beta1             no
___________________________________________________________________

# Pull the images for the target version
[root@master ~]# kubeadm config images pull --cri-socket unix:///var/run/cri-dockerd.sock --kubernetes-version v1.28.2 --image-repository=registry.aliyuncs.com/google_containers
```
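kubeadm does not support skipping minor versions: a 1.27 control plane has to go to 1.28 before 1.29, one minor version per upgrade. A sketch that checks a planned hop before running `kubeadm upgrade apply`, assuming versions of the form vMAJOR.MINOR.PATCH with the same major version; the names are hypothetical:

```shell
#!/bin/sh
# minor_of v1.27.6 -> 27
minor_of() {
  local v=${1#v}   # strip the leading "v": 1.27.6
  v=${v#*.}        # drop the major version: 27.6
  echo "${v%%.*}"  # keep the minor version: 27
}

# upgrade_step_ok FROM TO: exit 0 if TO is in the same or the next minor release as FROM
upgrade_step_ok() {
  local diff
  diff=$(( $(minor_of "$2") - $(minor_of "$1") ))
  [ "$diff" -eq 0 ] || [ "$diff" -eq 1 ]
}
```

For this cluster, `upgrade_step_ok v1.27.6 v1.28.2` succeeds, while `upgrade_step_ok v1.27.6 v1.29.1` fails.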

Running the upgrade

```shell
# Put the master into maintenance mode (cordon it and evict its Pods)
[root@master ~]# kubectl drain masters --ignore-daemonsets
node/masters cordoned
Warning: ignoring DaemonSet-managed Pods: kube-system/calico-node-ns4cs, kube-system/kube-proxy-rvk5g
evicting pod kube-system/coredns-7bdc4cb885-vnnqc
evicting pod kube-system/calico-kube-controllers-6c99c8747f-z5rmt
evicting pod kube-system/coredns-7bdc4cb885-7g7gw
pod/calico-kube-controllers-6c99c8747f-z5rmt evicted
pod/coredns-7bdc4cb885-vnnqc evicted
pod/coredns-7bdc4cb885-7g7gw evicted
node/masters drained
[root@masters ~]# kubectl get nodes
NAME      STATUS                     ROLES           AGE   VERSION
masters   Ready,SchedulingDisabled   control-plane   17m   v1.27.6
workers   Ready                      <none>          16m   v1.27.6
[root@masters ~]# kubeadm upgrade apply v1.28.2 --v=5
[upgrade/successful] SUCCESS! Your cluster was upgraded to "v1.28.2". Enjoy!
[upgrade/kubelet] Now that your control plane is upgraded, please proceed with upgrading your kubelets if you haven't already done so.
[root@masters ~]# kubectl uncordon masters
node/masters uncordoned
[root@masters ~]# systemctl daemon-reload
[root@masters ~]# systemctl restart kubelet.service
[root@masters ~]# kubectl get nodes
NAME      STATUS   ROLES           AGE   VERSION
masters   Ready    control-plane   27m   v1.28.2
workers   Ready    <none>          26m   v1.27.6
# On the worker node
# (the kubeadm documentation orders the worker steps as: upgrade kubeadm, run kubeadm upgrade node,
#  drain, upgrade kubelet and kubectl, restart kubelet, uncordon)
[root@workers ~]# dnf install kubeadm-1.28.2-0 kubectl-1.28.2-0 kubelet-1.28.2-0
[root@workers ~]# systemctl daemon-reload ; systemctl restart kubelet.service
# Put the worker node into maintenance mode
[root@masters ~]# kubectl drain workers --ignore-daemonsets
[root@masters ~]# kubectl get nodes
NAME      STATUS                     ROLES           AGE   VERSION
masters   Ready                      control-plane   31m   v1.28.2
workers   Ready,SchedulingDisabled   <none>          30m   v1.28.2
# kubeadm upgrade node runs on the worker itself
[root@workers ~]# kubeadm upgrade node
[root@masters ~]# kubectl uncordon workers
node/workers uncordoned
[root@masters ~]# kubectl get nodes
NAME      STATUS   ROLES           AGE   VERSION
masters   Ready    control-plane   32m   v1.28.2
workers   Ready    <none>          30m   v1.28.2
# Lock the versions again (on every node)
[root@masters ~]# dnf versionlock add kubeadm kubelet kubectl
Last metadata expiration check: 0:26:22 ago on Mon 22 Jan 2024 06:37:44 PM CST.
Adding versionlock on: kubeadm-0:1.28.2-0.*
Adding versionlock on: kubelet-0:1.28.2-0.*
Adding versionlock on: kubectl-0:1.28.2-0.*
```
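Once the last node is uncordoned, every node's VERSION column should show the target release. A sketch that reads `kubectl get nodes` output and lists the nodes still on another version, assuming VERSION is the last column (true for the default output, not for `-o wide`); `nodes_not_at` is a hypothetical name:

```shell
#!/bin/sh
# nodes_not_at VERSION: read `kubectl get nodes` output on stdin and print
# "NAME VERSION" for each node whose version differs from VERSION
nodes_not_at() {
  awk -v want="$1" 'NR > 1 && $NF != want { print $1, $NF }'   # NR > 1 skips the header
}
```

Usage: `kubectl get nodes | nodes_not_at v1.28.2` prints nothing once the upgrade is complete.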